Patient History

In 2018 a woman named April Burrell awoke from a two-decade-long catatonic state. Diagnosed with a severe form of schizophrenia at 21, April had spent the next 20 years of her life in a psychiatric institution. It was there, at Pilgrim Psychiatric Center in New York, that April first crossed paths with a young medical student named Sander Markx. Though, of course, she did not know it. As Markx later described her state, “She would just stare and just stand there. She wouldn’t shower, she wouldn’t go outside, she wouldn’t smile, she wouldn’t laugh. And the nursing staff had to physically maneuver her.” At the time there was nothing he could do to help her, but even as Markx moved forward in his career and became the director of precision psychiatry at Columbia University, he never forgot the young woman he had encountered at the nurses’ station at Pilgrim. April “was the first person I ever saw as a patient,” Markx told the Washington Post in 2023.

Almost two decades later, Markx had a lab of his own. He encouraged one of his research fellows to work in the trenches and suggested he spend time with patients at Pilgrim, just as he had done years earlier.

In an extraordinary coincidence, the trainee, Anthony Zoghbi, encountered a catatonic patient, standing at the nurse’s desk. The fellow returned to Markx, shaken up, and told him what he had seen.

“It was like déjà vu because he starts telling the story,” said Markx. “And I’m like, ‘Is her name April?’”

Markx was stunned to hear that little had changed for the patient he had seen nearly two decades earlier. In the years since they had first met, April had undergone many courses of treatment — antipsychotics, mood stabilizers and electroconvulsive therapy — all to no avail.

Markx was able to get family consent for a full medical work-up…. Her bloodwork showed that her immune system was producing copious amounts and types of antibodies that were attacking her body. Brain scans showed evidence that these antibodies were damaging her brain’s temporal lobes, brain areas that are implicated in schizophrenia and psychosis.

Even though April had all the clinical signs of schizophrenia, the team believed that the underlying cause was lupus, a complex autoimmune disorder where the immune system turns on its own body…. The autoimmune disease, it seemed, was a specific biological cause — and potential treatment target — for the neuropsychiatric problems April faced.

The medical team set to work counteracting April’s rampaging immune system and started April on an intensive immunotherapy treatment for neuropsychiatric lupus. Every month for six months, April would receive short, but powerful “pulses” of intravenous steroids for five days, plus a single dose of cyclophosphamide, a heavy-duty immunosuppressive drug typically used in chemotherapy and borrowed from the field of oncology. She was also treated with rituximab, a drug initially developed for lymphoma.

April started showing signs of improvement almost immediately.

As part of a standard cognitive test known as the Montreal Cognitive Assessment (MoCA), she was asked to draw a clock — a common way to assess cognitive impairment. Before the treatment, she tested at the level of a dementia patient, drawing indecipherable scribbles.

But within the first two rounds of treatment, she was able to draw half a clock — as if one half of her brain was coming back online, Markx said.

Following the third round of treatment a month later, the clock looked almost perfect.

At the time, Markx had to travel home to the Netherlands. On the day Markx was scheduled to fly out, he entered the hospital one last time to check on his patient, who he typically found sitting in the dining room in her catatonic state.

But when Markx walked in, April didn’t seem to be there. Instead, he saw another woman sitting in the room.

“It didn’t look like the person I had known for 20 years and had seen so impaired,” Markx said. “And then I look a little closer, and I’m like, ‘Holy s---. It’s her.’”

After more than two decades under the psychiatric diagnosis of schizophrenia, within months of starting treatment for the autoimmune disorder that had been ravaging her brain all along — April woke up.

Elke Martinez was a veterinary technician who developed muscle and joint pain, headaches, fatigue, and gastrointestinal problems. When she went to her primary care doctor, he took one look at her medical record in the Kaiser Permanente healthcare system and attributed her physical symptoms to the psychiatric diagnosis that he saw already in her chart – depression and anxiety. Martinez was already receiving treatment for those issues, and it was effective; she knew this was something else. But what could she do? As Julie Rehmeyer writes in OpenMind:

What Martinez did was humor her doctor. She attended Kaiser’s group cognitive behavioral therapy classes. The classes didn’t improve any of her symptoms, but they did consume a lot of her time and energy. Meanwhile, she saw more doctors to try to figure out what was actually wrong, but every Kaiser-affiliated doctor asked her about the psychiatric diagnosis already in her chart. “You can see on their face that they’re already checked out,” she says. These experiences undermined not only her trust in her doctors, but also in herself: “You get told this enough and you start to believe it and doubt yourself.”

Martinez realized that the only way she was going to get a proper diagnosis of her physical symptoms was by leaving the Kaiser system, so that she could go to a new set of doctors who couldn’t see the psychiatric misdiagnosis in her chart. Thirteen years after her symptoms started, she finally got an explanation: She has Ehlers-Danlos Syndrome, a disorder of the connective tissue that can cause devastating symptoms throughout the body. By the time she received a proper diagnosis, she was disabled and had to give up the career she loved in veterinary work. And she was luckier than many. On average, when a psychiatric misdiagnosis comes first, it takes patients 22 years to get diagnosed with Ehlers-Danlos Syndrome.

When one U.S.-based patient I interviewed, who requested anonymity, was erroneously diagnosed with Munchausen’s syndrome — meaning that she was accused of fabricating her illness — she became unable to get medication for her severe pain for several months, while her therapist worked to persuade the psychologist who diagnosed her to remove it from her chart. In the meantime, she resorted to taking large doses of Ibuprofen, which resulted in a stomach ulcer.

The Medical Record

Medicine’s history is rife with cases of “mysterious” maladies being dismissed as mental illnesses. In the early 1900s, multiple sclerosis was referred to as the “faker’s disease.” It wasn’t until the 1980s that MRI technology made the brain lesions of MS visible. And it wasn’t until 2022 that researchers identified how multiple sclerosis is triggered by the Epstein-Barr virus, which tricks the immune system into attacking the body’s own nervous system.

The medical tendency to write off physiological disorders as psychological problems is a manifestation of a deeper human bias to cast the sufferer as the source of their own pain. In ancient times, disease and misery were believed to be retribution by the gods that humans brought upon themselves through their sins or crimes or lack of fealty. The ancient Greek physician Hippocrates, “the father of modern medicine,” disentangled the ailments of the body from the wrath of the gods, and yet he reduced all female afflictions to “hysteria,” from the Greek word for womb. The idea that the female body is responsible for all women’s dysfunctions is as old as “modern medicine” itself.

Later, another father, this time of psychoanalysis, extended “hysteria” into a bona fide diagnosis. For Sigmund Freud, hysteria was the result of psychological issues that are unconsciously “converted” into physical symptoms. Although “hysteria” was removed from the Diagnostic and Statistical Manual of Mental Disorders in 1980, psychological conditions that are converted into bodily symptoms with no biological cause can still be diagnosed today as Conversion Disorder, or Functional Neurological Disorder (ICD-10 diagnosis codes F44.4 to F44.9), entered into a patient’s medical record, and billed to insurance for treatment. Through this lens, literally any symptom could have a psychological origin, and so even looking for an alternative, biological explanation becomes a fruitless task.

Although medical science is only just beginning to uncover the details of how the brain, mind, and nervous system do, of course, affect the body in the most profound ways (within just the last few months, researchers have identified the part of the brain that regulates the immune system 😳 and the neural circuit basis of the placebo effect 😱), the nature of chronic conditions in particular lends itself to psychologization. These conditions are often intractably resistant to whatever scant “approved” treatment options are available, or may even be worsened by them. Patients whose symptoms go through cycles of flare and remission are non-compliant with the mandate to present their sickness in a reliably consistent way. And when lab results keep coming back “normal,” yet difficult patients refuse to accept this and get better…. Well, only someone mentally ill would want to be sick.

Many postviral illnesses are characterized by chronic dysregulation of autonomic body functions and systems, from the ones that regulate energy production and exertion recovery to circulation, balance, breathing, and the brain’s ability to do stuff at all. Myalgic Encephalomyelitis/Chronic Fatigue Syndrome (ME/CFS) is a quintessential example of this type of disorder. Typically brought on in the aftermath of a viral infection, the disease has no widely accepted biomarkers (though research is underway) and no approved treatment. Yet it carries a disease burden double that of HIV/AIDS and more than half that of breast cancer, while being the most underfunded by the NIH relative to that burden. Not for nothing, it also disproportionately affects women (hysterics!), with a ratio of nearly 2 to 1. ME/CFS was estimated to affect 1 to 2.5 million Americans in 2019, but after just the first three years of a mass viral pandemic, 3.3 million adults in the U.S. had been diagnosed with ME/CFS, according to the CDC. “The actual numbers may be higher,” the CDC notes, “as previous studies suggest many people with ME/CFS have not been diagnosed.” Unsurprisingly, about half of people with Long COVID meet the diagnostic criteria for ME/CFS.

A big driver of the underdiagnosis is that before 2020, fewer than one in three American medical schools taught students about ME/CFS at all. “The U.S. has so few doctors who truly understand the disease and know how to treat it,” Ed Yong, who won a Pulitzer Prize for his reporting on the COVID-19 pandemic, wrote in The Atlantic in 2022, “that when they convened in 2018 to create a formal coalition, there were only about a dozen, and the youngest was 60. Currently, the coalition’s website lists just 21 names, of whom at least three have retired and one is dead.”

With a dearth of expert knowledge and an overabundance of medical misunderstanding has come the mass gaslighting of people with ME/CFS as head cases whose own troubled minds have manifested their physical symptoms into reality. George Monbiot, writing in the Guardian, called it “The greatest medical scandal of the 21st century.” And it’s a scandal documented over and over in patient medical records: a diagnostic data set with a recurring, corrupted pattern, the volume of its repetition increasing the confidence in its own correctness, validating the wrong answer over and over again.

In the case of April Burrell, it took a team of more than 70 experts from Columbia and around the world (neuropsychiatrists, neurologists, neuroimmunologists, rheumatologists, medical ethicists) to figure out that what had been treated as a psychiatric disorder for 20 years was, in fact, a symptom of a type of lupus, and to unlock the pathway to recovery. April’s case is extreme, but the pattern behind it is far from uncommon. “A 2014 survey by the Autoimmune Association,” Rehmeyer writes, “found that 51 percent of patients with autoimmune disease report that they had been told that ‘their disease was imagined or they were overly concerned.’ And a 2019 survey of 4,835 patients with postural orthostatic tachycardia syndrome found that before getting a correct diagnosis, 77 percent of them had a physician suggest their symptoms were psychological or psychiatric.”

If 77% of people who are diagnosed with POTS were first misdiagnosed… first of all… that’s crazy. Imagine if 77% of people with prostate cancer were first misdiagnosed! But furthermore, that’s what’s in the medical record; that’s what’s in the diagnostic data set for patients presenting with POTS symptoms. And 77% of the time… it’s wrong.

AI Has Entered The Chart

In 2009, the HITECH Act, passed as part of that year’s American Recovery and Reinvestment Act, kickstarted the digitization of Americans’ medical information, incentivizing medical practices to move from paper charts to Electronic Health Records (EHRs) with data stored in digital databases. In countries with national healthcare systems, patient information is often housed in a single, national EHR. The result of this transition has been the creation of a massive tranche of medical data. Digital patient charts follow people from literal birth, through every doctor’s visit and hospital stay, every infection and injury, every prescription and worrisome symptom, every time you didn’t quite feel right and every serious illness and that thing you just wanted your doctor to “check out”… in case.

The availability of this data allows us to see all kinds of patterns we simply could not have identified before. Analyzing the electronic health records of half a million patients from Finland and the UK, researchers identified a significant association between a viral infection documented in a patient’s chart and an increased risk of developing neurodegenerative diseases such as Alzheimer’s as much as 15 years later. And some of the most significant research into the long-term effects of COVID infection has come from work done by Ziyad Al-Aly, analyzing the data in America’s largest EHR system, used by the VA.

But EHR data also contains a record of medicine’s chronic bias of psychologizing biophysical dysfunction. Of getting POTS wrong 77% of the time. Of an average of 22 years of negative data on Ehlers-Danlos Syndrome diagnosis because a psychiatric diagnosis got there first. I wouldn’t go so far as to say the combined data of every EHR system is like medicine’s Akashic records – a compendium of all medical events to have occurred in the universe – but it’s the closest thing to it we’ve ever had. And every misdiagnosis in those records is not only wreaking havoc in a person’s life, but compounding into an unviable data set of years, decades of patient histories, embedding its false premise into every subsequent application of the data.

And then, AI arrived.

Within months of the release of ChatGPT, the computational process ravenously consuming all the content output of humanity was able to generate the answers required to pass the US medical licensing exam. A breathless stream of headlines proclaimed, “The AI Will See You Now.” On its heels, however, came examples of the harm that these advanced machine learning tools, hyped as oracular consciousnesses, can do: their misleading “hallucinations,” their recapitulation of human bias and pseudoscience, their bald-faced lies told with a big, American smile.

Undeniably, of course, artificial intelligence holds tremendous potential for the future of medicine. In an evaluation of 70 complex patient cases from the New England Journal of Medicine, ChatGPT was able to perform as well as or better than expert clinicians on diagnosis. In his December 2023 TED talk on how AI can transform diagnostic practice, Dr. Eric Topol, Director & Founder of the Scripps Research Translational Institute, explained how he and the group at Moorfields Eye Hospital, led by Pearse Keane, had developed the first open source foundation model in medicine from the retina. Using 1.6 million retinal images, their self-supervised learning model was able to predict the likelihood of eye disease, heart attacks, heart failure, strokes, and Parkinson’s disease. Previous studies using retinal images and supervised learning models had also been able to predict diabetes and blood pressure control, kidney disease, liver and gallbladder disease, hyperlipidemia, and Alzheimer’s disease before any clinical symptoms had manifested. When it comes to disease prediction, as Dr. Topol states, the retina “is the gateway to almost every system in the body.”

How does all this prediction happen?

As the researchers write, the AI was trained on “a retrospective dataset that includes the complete ocular imaging records of 37,401 patients with diabetes who were seen at Moorfields Eye Hospital between January 2000 and March 2022.” In other words, the model was able to identify recurring visual patterns in the retinal images attached to patient records containing a diabetes diagnosis. It could then evaluate the retinal images of other patients against these patterns and make predictions. For the pattern matching to work, everything hinges on the training set being accurate: on the patients with a diabetes diagnosis in their chart really having diabetes. Otherwise, it’s garbage in, garbage out. Diabetes, of course, can be confirmed with very clear biomarkers, such as blood glucose levels. What happens when a disease is not so accommodating?
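To make the garbage in, garbage out point concrete, here is a deliberately crude Python sketch. It is not how the Moorfields model or any real diagnostic AI works: the “model” below simply counts which label most often accompanies a symptom pattern in past charts, and the 100 records are invented to mirror the POTS survey figure, where 77% of patients first had their symptoms called psychological. All names are hypothetical.

```python
from collections import Counter, defaultdict


def train(records):
    """Toy pattern matcher: count which chart label accompanies each symptom set."""
    model = defaultdict(Counter)
    for symptoms, label in records:
        model[frozenset(symptoms)][label] += 1
    return model


def predict(model, symptoms):
    """Return (most frequent label, share of matching records that carry it)."""
    counts = model.get(frozenset(symptoms))
    if not counts:
        return None, 0.0  # pattern never seen in training
    label, n = counts.most_common(1)[0]
    return label, n / sum(counts.values())


# Hypothetical cohort: 100 patients who all truly have POTS.
pattern = {"tachycardia on standing", "dizziness", "fatigue"}
clean_charts = [(pattern, "POTS")] * 100
# The same patients, but 77 charts first recorded a psychological explanation.
biased_charts = [(pattern, "psychological")] * 77 + [(pattern, "POTS")] * 23

print(predict(train(clean_charts), pattern))   # ('POTS', 1.0)
print(predict(train(biased_charts), pattern))  # ('psychological', 0.77)
```

The counting model is not malfunctioning. It reproduces exactly what the charts say, and attaches a confidence score to the bias.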

“As a matter of peculiar professional fact,” bioethicist Diane O’Leary and health psychologist Keith Geraghty state in the Oxford Handbook of Psychotherapy Ethics, “there is no term that names diagnostic uncertainty without also naming psychological diagnosis.”

“It’s psychological” is the diagnosis code for “I don’t know.”

As Rehmeyer writes:

Even today, doctors routinely use the term “medically unexplained symptom” to imply a psychological origin for a patient’s physiological reports. In UpToDate, a highly respected online guide for evidence-based treatment, a search for “medically unexplained symptoms” reroutes to an entry on somatization in psychiatry. Both the language and the culture of modern medicine systematically nudge some doctors toward the assumption that ambiguous symptoms are psychosomatic.

Psychological diagnoses are often the easiest ones for doctors to make, and the hardest ones for patients to shake. Once a psychological diagnosis is entered into a patient’s medical records, it becomes the starting place for every subsequent doctor who reads it.

In the era of machine learning and AI foundation models and transformers, who’s reading it will no longer be just a doctor.

Somewhere in the Kaiser system, an old patient record may still be hanging around for a woman whose symptoms of EDS were diagnosed as depression, contributing yet one more data point for a prediction engine to consume. What will a deep learning algorithm glean from this data?

Even as vestiges of “hysteria” wander through the corpus of EHR databases, machine learning models are being trained on these data sets. Will AI entrench and reify these biases in the future of medicine? Or does it actually hold the potential to become a tool that counteracts their effect?

Outcome-Based Evidence

For all the focus on GPT-4’s proficiency at diagnosis and machine vision’s ability to spot disease even before symptoms develop, patient outcomes get far less attention.

It’s all well and good to have a label for what ails you, but… what then?

Did the treatment help?

Take the patients who came to their doctors with the symptoms of postural orthostatic tachycardia syndrome, 77% of whom had a physician suggest those symptoms were psychological or psychiatric. For the ones who were ultimately able to get relief, which treatment approaches yielded the best results?

In 2023, the startup Eureka Health collected a large dataset of Reddit posts in which people discussed their treatment experiences, along with reports from the Eureka user community, and processed it with GPT-4 to analyze outcomes. Below is an example they shared on Twitter at the time, on the effect of beta blockers on tachycardia: a heart rate faster than 100 beats per minute, and the T in POTS.

“For beta blockers,” they posted, “You see that nearly everyone had moderate or significant tachycardia benefit. They also help with anxiety, high blood pressure, heart palpitations, and more.”

Eureka also used the patient-reported data to identify side effects, compare brands and treatment types, dosages and treatment combinations, and more. At the time, the startup positioned itself as “The first community dedicated to trying new treatments together, and sharing reviews and data to advance our collective understanding.” With a special focus on poorly understood conditions such as Long COVID, ME/CFS, POTS, EDS, and MCAS, they offered users the ability to “Discover what the community is trying, and see the impact on people like you.” In 2024, Eureka pivoted to endocrine disorders such as thyroid conditions and diabetes. It now positions itself as “The world's first AI doctor.”

Patient self-reported information is, of course, incredibly useful, but the broader range of data available in an EHR offers many more signals for machine learning to analyze, and many more patterns to uncover from them. These kinds of data can include:

- Lab results and imaging
- Diagnosis and symptom histories, comorbidities, and changes over time
- Standardized assessment screeners, questionnaire results, and risk scores
- Specialist visits, and ER and hospital admissions
- Medication tolerance, adherence, history, and combinations

Diagnoses, symptoms, and prescribed treatments are labeled with structured database codes that are easily legible to a computational engine. Outcomes are not. If a patient with a chronic illness stops coming to their doctor, is it because their condition resolved or went into remission, or because they lost faith that the doctor could help them? Or did their condition worsen so much that they could not make it to their appointments at all? The doctor often doesn’t know, and neither does the system. Beyond diagnosis, connecting the multitude of disparate signals in a patient record to understand whether a treatment worked, whether it worked better than another approach, or whether it even made things worse, and which constellations of factors contributed to any of those outcomes, is exactly the kind of gargantuan data-processing and pattern-matching task machine learning can do.
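Here is a minimal, hypothetical sketch of what turning a chart into an outcome label might look like, assuming a simplified record with invented fields (a patient-reported follow-up, ER visit counts before and after treatment, and any later contact with the practice). No real EHR schema is implied. The one deliberate design choice is that a patient who simply stops coming in is labeled as lost to follow-up, not quietly counted as a success.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class TreatmentEpisode:
    """Hypothetical summary of what a chart shows after a treatment starts."""
    followup_report: Optional[str]  # patient-reported "better", "same", or "worse"
    er_visits_before: int           # ER visits in a window before treatment
    er_visits_after: int            # ER visits in an equal window after
    visits_after: int               # any later contact with the practice


def infer_outcome(ep: TreatmentEpisode) -> str:
    # A direct patient report, when one exists, is the strongest signal.
    if ep.followup_report in {"better", "same", "worse"}:
        return ep.followup_report
    # More emergency visits after treatment than before suggests worsening.
    if ep.er_visits_after > ep.er_visits_before:
        return "worse"
    # Silence is ambiguous: remission, lost faith, or too sick to come in.
    if ep.visits_after == 0:
        return "lost_to_followup"
    # The patient is still being seen, but nothing says whether it helped.
    return "not_recorded"


print(infer_outcome(TreatmentEpisode(None, 0, 0, 0)))  # lost_to_followup
```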

As Dr. Topol explained in his TED Talk, with GPT-4’s capacity to process over a trillion connections, we have “the ability to now be able to look at many more items, whether it be language or images, and be able to put this in context, setting up a transformational progress in many fields.”

As Molly Freedenberg, a friend with ME/CFS, told me:

My doctor is CONSTANTLY telling me that he doesn't have any patients with the side effects I have from meds, but I'm in patient groups for PEOPLE WHO SEE THIS DOCTOR and I know for a fact that, like, a dozen people have had the same experience. So he clearly has some kind of bias where he's not recording or registering that information in his brain - or in his files - which means he not only doesn't warn us about those effects but treats us like we're possibly full of shit (or just magical unusual unicorn snowflakes he's sooooo baffled by) when we report issues. I would LOVE a system that takes some of the bias out of THAT.

I think us wanting to please our doctors - or literally needing to for survival reasons - is a HUGE problem in the medical field and finding a way around it would have huge implications for better outcomes. I know so many people who go to their doctor, say yes to treatments they know aren't right for them, etc. We lose so much time doing backflips for the doctors so they'll keep seeing us, for that 20% of the time they're actually treating what we need them to, the way we need them to.

Could an AI model looking at patient record data to understand outcome patterns – including side effects and contraindications that happen in the real world – be able to identify what that 20% of the time is? And then make that the frontline for clinical decision support?

There is, of course, one entity that is very interested in looking at outcomes, and that is insurance companies. The outcome they are interested in, however, is cost. This is why patients over a certain age are constantly reminded to get mammograms, colonoscopies, and other screenings. Catching something precancerous is much less expensive than treating Stage 4 cancer, so reducing claims for costly, advanced cancer treatments gives insurance companies a strong financial incentive. Incidentally, patients benefit too.

Insurance companies are already using AI to deny whatever treatment it deems “unnecessary.” What if using AI to analyze outcome patterns, especially for the most complex chronic illnesses, were driven not just by financial incentives but by patient care incentives? What could we find?

In December 2020, Congress provided the NIH with $1.15 billion to fund the Long Covid “RECOVER” initiative. Two and a half years later, that money had all been spent without a single clinical trial or promising drug candidate to show for it. Relatedly, there were no validated treatments for ME/CFS even before COVID arrived. But there is data left behind in patients’ charts, and some treatment approaches have proven more effective than others for managing symptoms. Patients are often left to their own devices, scouring forums and patient groups to find these patterns on their own. In the absence of evidence-based outcomes, outcome-based evidence for many “mysterious” diseases is hiding in the data patterns of patient records. This is no replacement for the actual “Moonshot” research funding that these neglected diseases desperately need. But this data could offer patients some options in the meantime, and perhaps even accelerate our understanding of the biophysical origins of a whole class of diseases that have long been misunderstood or labeled “medically unexplained.”

In a sense, a diagnosis is just a human-defined construct. A “syndrome,” for example, is often just a term medicine uses to repackage a group of frequently co-occurring symptoms as a diagnosis unto itself. Polycystic Ovarian Syndrome (PCOS) is a case in point: it is basically just a cluster of symptoms. You can be diagnosed with PCOS if you have a certain number of the syndrome’s symptoms, even without having its eponymous ovarian cysts!

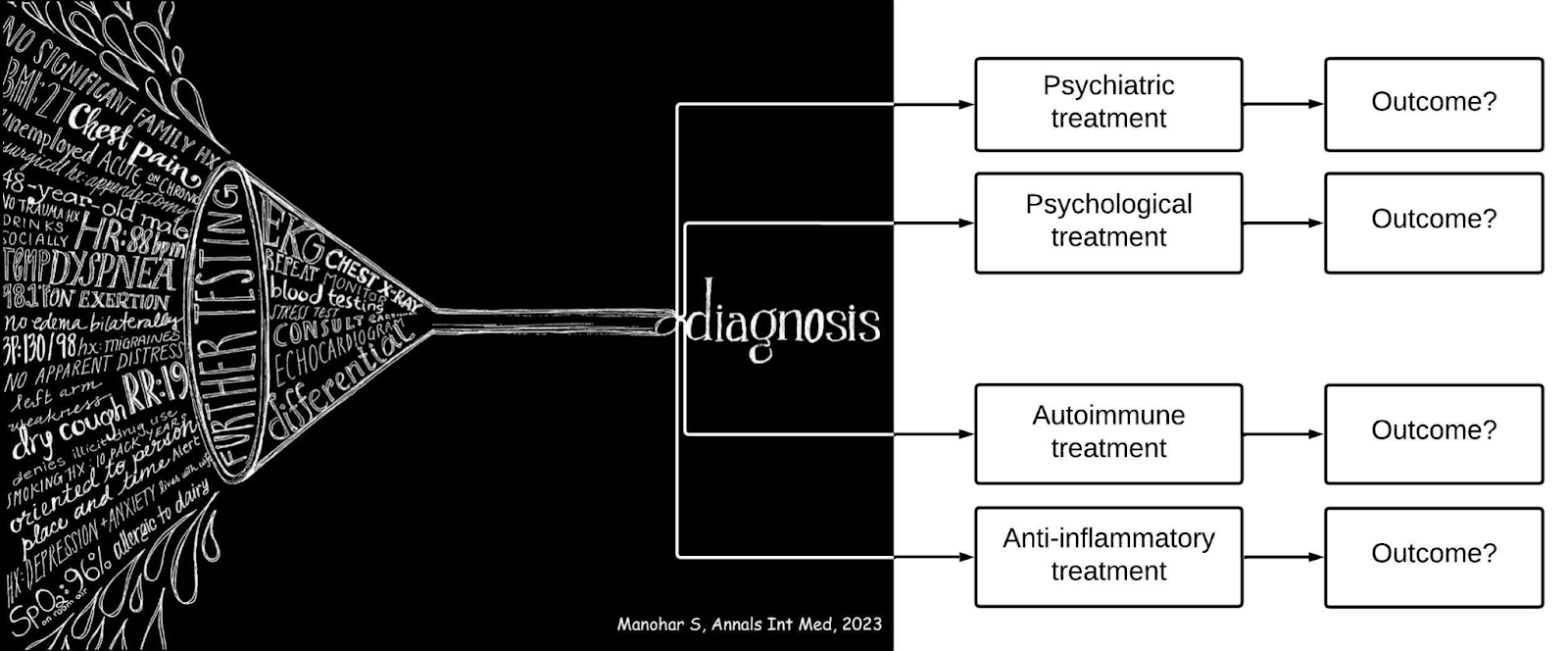
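The PCOS example can be written down as a counting rule. Under the widely used 2003 Rotterdam criteria, PCOS can be diagnosed when at least two of three features are present (irregular or absent ovulation, clinical or biochemical signs of excess androgens, and polycystic-appearing ovaries on ultrasound), once other causes have been excluded. The Python sketch below encodes only the two-of-three count, with hypothetical field names, and leaves out the exclusion step; it illustrates how a syndrome label works and is not a clinical tool.

```python
from dataclasses import dataclass


@dataclass
class RotterdamFindings:
    """Hypothetical record of the three Rotterdam features for one patient."""
    irregular_ovulation: bool
    excess_androgens: bool
    polycystic_ovaries_on_ultrasound: bool


def meets_two_of_three(f: RotterdamFindings) -> bool:
    # Count how many of the three features are present; two is enough.
    # The real criteria also require excluding other conditions first,
    # which this sketch does not model.
    features = (
        f.irregular_ovulation,
        f.excess_androgens,
        f.polycystic_ovaries_on_ultrasound,
    )
    return sum(features) >= 2


# No polycystic ovaries on ultrasound, yet the count is met.
print(meets_two_of_three(RotterdamFindings(True, True, False)))  # True
```

A patient with no ovarian findings at all still clears the threshold, which is the point: the label is a consensus threshold over a symptom cluster.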

In the end, then, all diagnostic machine learning is just trying to match symptoms to human ideas of what diseases and disorders are: ideas informed by human biases and cultural beliefs, medical misconceptions, and scientific knowledge gaps, all of which are constantly shifting and evolving.

When it comes to illness, people want to feel better. They want to live the best quality of life they can. If a psychiatric or psychological treatment for a particular cluster of symptoms, in a patient with specific characteristics, leads to better outcomes, great! That’s an important signal. But when patients report that a treatment approach not only doesn’t work but further hinders their wellbeing, why not compare it against the treatment options that have led to better outcomes in the patterns of medical record data?

What if, rather than just matching symptoms to diagnoses, we trained AI to look at patient outcomes and reverse-engineer which factors led to the best ones? If you can put all the symptoms into a machine learning engine, cross-reference them against patient profiles, identify meaningful patterns, and then trace those patterns to the results of various treatment approaches across hundreds of thousands or even millions of patient records and trillions of variables… then a diagnostic construct that stands in the way of better outcomes might not add much value at all.
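A minimal sketch of the outcome-first idea, assuming episodes have already been reduced to (patient profile, treatment, outcome) tuples; the profiles, treatments, and counts below are placeholders, not real results. It tallies, for each profile, how often each treatment was followed by a “better” outcome, skips unknown outcomes, and ignores groups too small to compare.

```python
from collections import defaultdict


def outcome_table(episodes, min_known=5):
    """Share of "better" outcomes per (profile, treatment), ignoring unknowns.

    episodes: iterable of (profile, treatment, outcome) tuples, where outcome
    is "better", "same", "worse", or "unknown". Groups with fewer than
    min_known recorded outcomes are dropped as too thin to compare.
    """
    known = defaultdict(int)
    better = defaultdict(int)
    for profile, treatment, outcome in episodes:
        if outcome == "unknown":
            continue
        key = (profile, treatment)
        known[key] += 1
        better[key] += outcome == "better"  # True counts as 1
    return {key: better[key] / n for key, n in known.items() if n >= min_known}


def best_treatment(table, profile):
    """Treatment with the highest observed "better" rate for one profile."""
    options = {t: rate for (p, t), rate in table.items() if p == profile}
    return max(options, key=options.get) if options else None


# Placeholder episodes, not real data.
episodes = (
    [("profile_x", "treatment_a", "better")] * 2
    + [("profile_x", "treatment_a", "same")] * 6
    + [("profile_x", "treatment_b", "better")] * 7
    + [("profile_x", "treatment_b", "worse")] * 1
    + [("profile_x", "treatment_b", "unknown")] * 4
)
table = outcome_table(episodes)
print(table)  # {('profile_x', 'treatment_a'): 0.25, ('profile_x', 'treatment_b'): 0.875}
print(best_treatment(table, "profile_x"))  # treatment_b
```

This is observational data, so a higher rate shows association, not proof that the treatment caused the improvement; who receives which treatment is itself shaped by doctors’ assumptions. A real analysis would need to adjust for those confounders before treating any ranking as evidence.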

With the advent of digital patient records, every time we walk into a doctor’s office we are all now contributing data to an ongoing, real-time experiment testing out every treatment approach with every patient profile ever conceived. Not a clinical trial in a controlled, isolated environment, but a new kind of trial. One that’s happening out in the real world, with real patient situations and real care delivery scenarios. One where all the factors of human life and healthcare systems come into play. A trial we’ve never had the technology to run before. Until now. By looking beyond mere diagnosis and analyzing outcomes, AI can be a tool not just for mitigating medical bias but for truly transforming medicine itself for a new millennium.

I think AI would be able to find these patterns if patients’ perspectives were incorporated into their medical records as a matter of course. If we can get automated appointment reminders, we should also be able to get automated follow-up surveys: Were all of your medical concerns addressed to your satisfaction? A “maybe” answer could prompt more questions, for example whether the doctor prescribed a particular treatment and, if so, what the expected timeline for results is. That timeline could then trigger a follow-up survey at the designated later date asking whether the treatment is having the desired effect. A “no” answer could prompt more questions and open a space for the patient to describe problems in the session. If the data is collected for machine learning purposes, it could be kept inaccessible to doctors except as aggregate metadata highlighting overall patterns.
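The survey branching described above can be sketched in a few lines of Python. The function name, question wording, and answer values are all hypothetical; the sketch only shows how a “maybe” answer plus the patient’s reported treatment timeline could schedule a later check-in, while a “no” opens a free-text question.

```python
from datetime import date, timedelta
from typing import List, Optional, Tuple


def plan_followups(
    visit_date: date,
    concerns_addressed: str,
    treatment: Optional[str] = None,
    expected_weeks: Optional[int] = None,
) -> List[Tuple[date, str]]:
    """Return (send_date, question) pairs for a hypothetical post-visit survey.

    concerns_addressed answers "Were all of your medical concerns addressed
    to your satisfaction?" with "yes", "maybe", or "no". treatment and
    expected_weeks stand for the patient's answers to the "maybe" questions.
    """
    plan: List[Tuple[date, str]] = []
    if concerns_addressed == "no":
        # Open a free-text space to describe problems in the session.
        plan.append((visit_date, "What went wrong in your appointment?"))
    elif concerns_addressed == "maybe":
        plan.append((visit_date, "Did your doctor prescribe a treatment, and "
                                 "what timeline for results did they give?"))
        if treatment and expected_weeks:
            # Check back when the treatment was expected to show results.
            check_in = visit_date + timedelta(weeks=expected_weeks)
            plan.append((check_in, f"Is {treatment} having the desired effect?"))
    return plan


for when, question in plan_followups(date(2024, 1, 1), "maybe", "treatment_a", 6):
    print(when, question)
```

Who gets to see the answers is a policy decision the sketch leaves out; one option, as suggested above, is to expose them to doctors only as aggregate patterns.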

What would it take to automate surveys to all patients? What would it take to legally require it as a means of protecting patients' rights?